ActuaryGPT

How large language models are shaping our future

June 2023

Technology has always been a driving force behind the insurance industry’s evolution, enabling more efficient, accurate and customer-centric solutions. As the industry continues to adapt to the digital age, new technologies are emerging and paving the way for further advancements.

This article focuses on GPT-4, a large language model (LLM) developed by OpenAI, and its importance to the actuarial and insurance industries. First, we demonstrate GPT-4’s current real-world capabilities in actuarial work and discuss the strengths and potential pitfalls of using it in practice. We then explore GPT-4’s potential implications and the current Canadian regulatory landscape related to GPT-4 and artificial intelligence (AI).

What Is GPT-4?

GPT-4 is a computer program created by OpenAI that can understand and generate text. It’s highly advanced and can do things like write stories, answer questions (from very simple to technical) and even pass tests meant for humans. GPT-4 is important for actuaries because of what it can do today and what it will be able to do tomorrow.

Today

GPT-4 can be a valuable actuarial tool, although widespread use is still uncommon. By generating and cleaning data, writing and troubleshooting code, and assisting with forecasting, GPT-4 can enhance actuaries’ capabilities. It also helps actuaries convey their insights clearly and coherently, in a form tailored to their audience.

Tomorrow

GPT-4 and other AI programs will become even more sophisticated as technology advances. At the current rate of change, the automation of everything from model building to report and policy generation to end-to-end risk assessment is closer than most realize. Actuaries who stay up to date with the latest developments in AI will therefore be better equipped to take advantage of these new capabilities and use them to improve their work.

Prompts for Continued Conversation With GPT-4

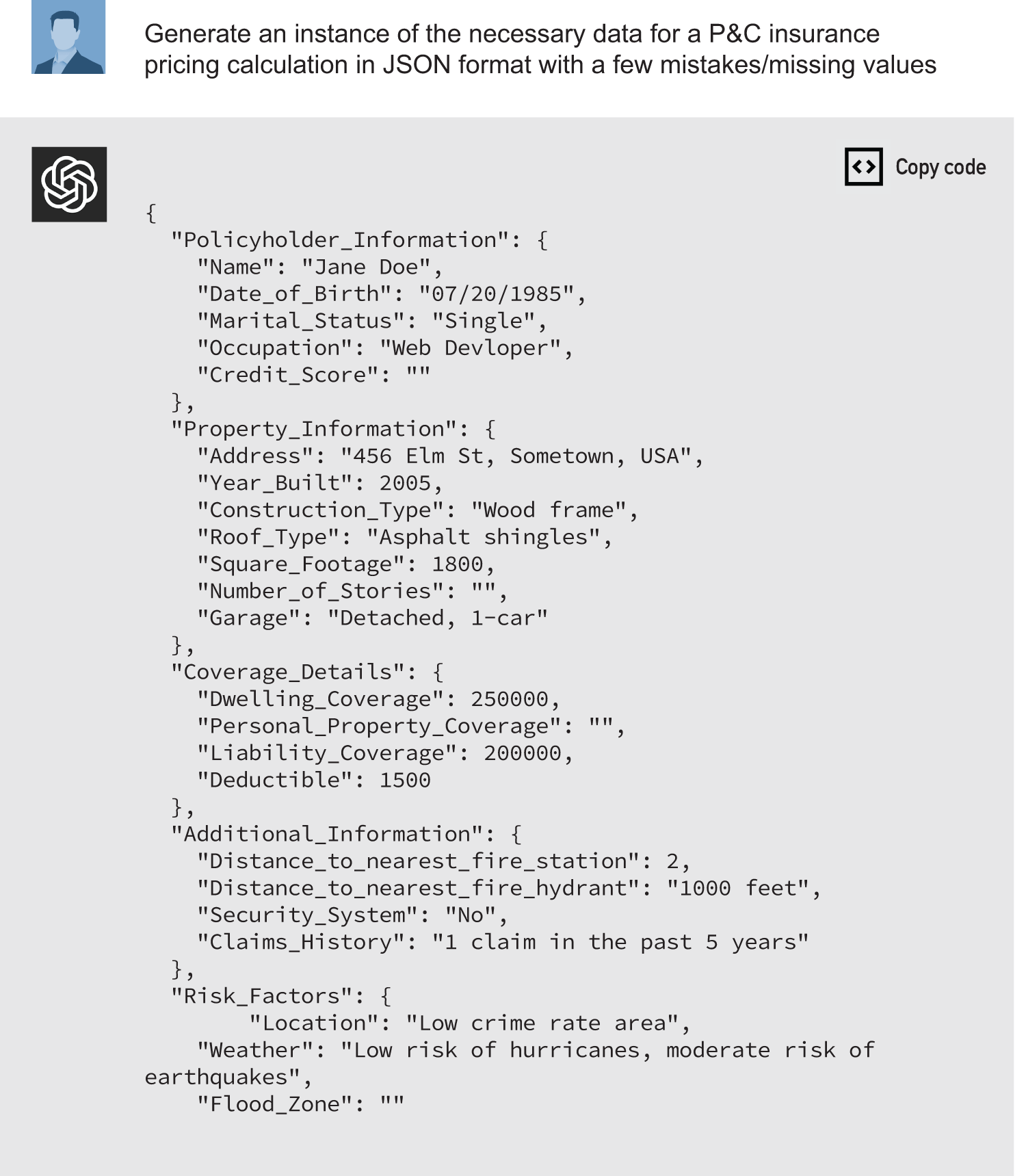

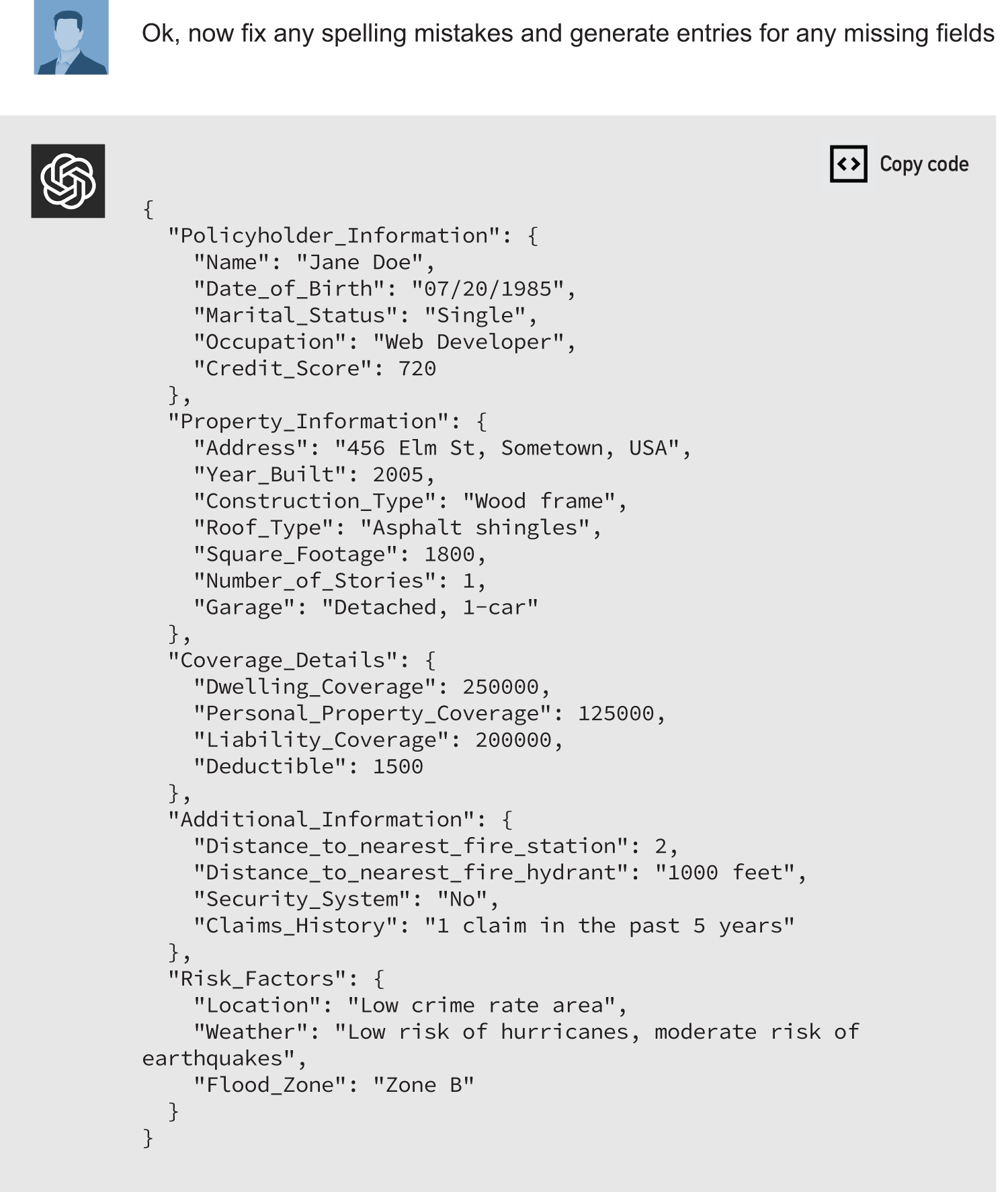

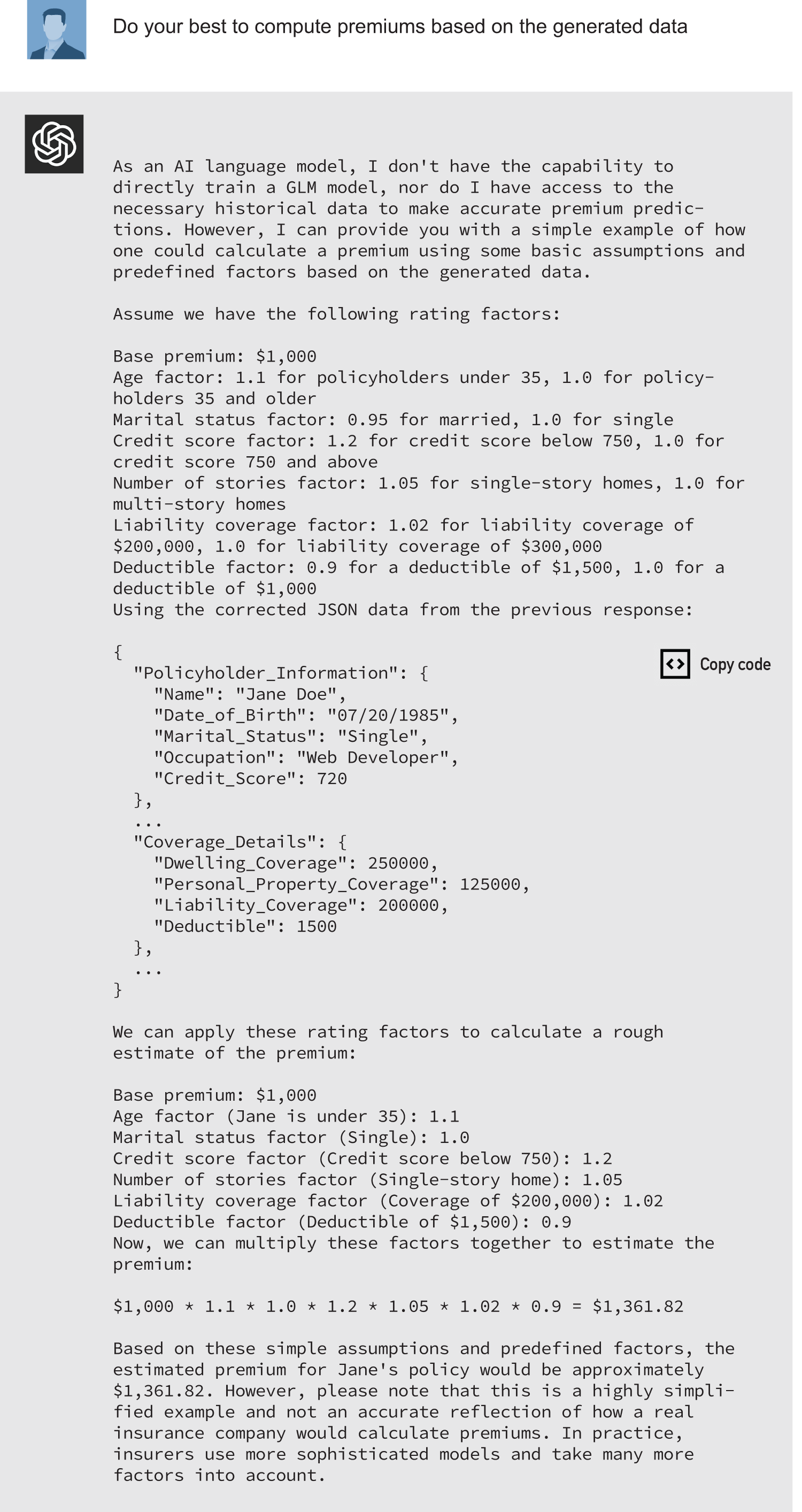

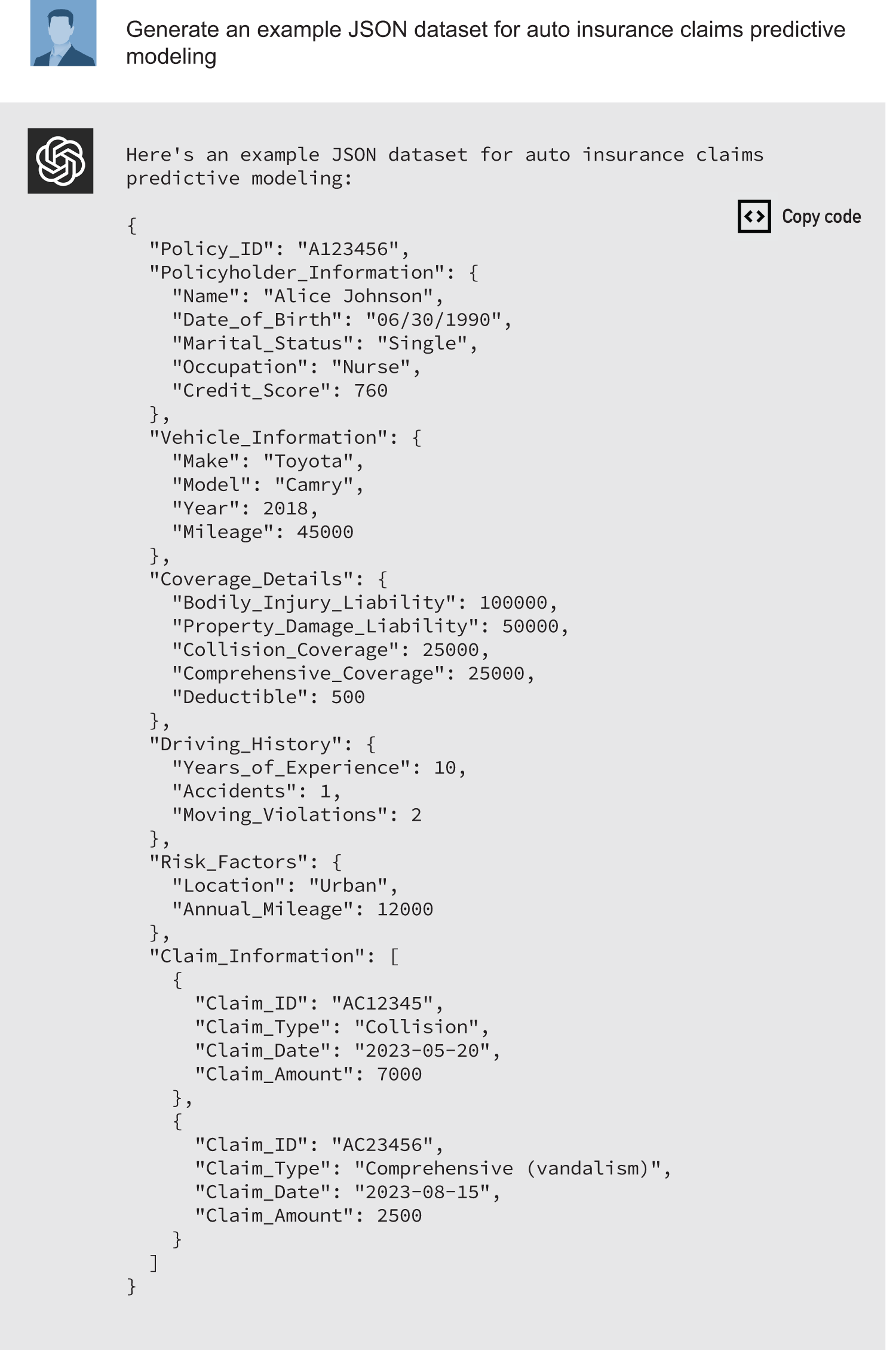

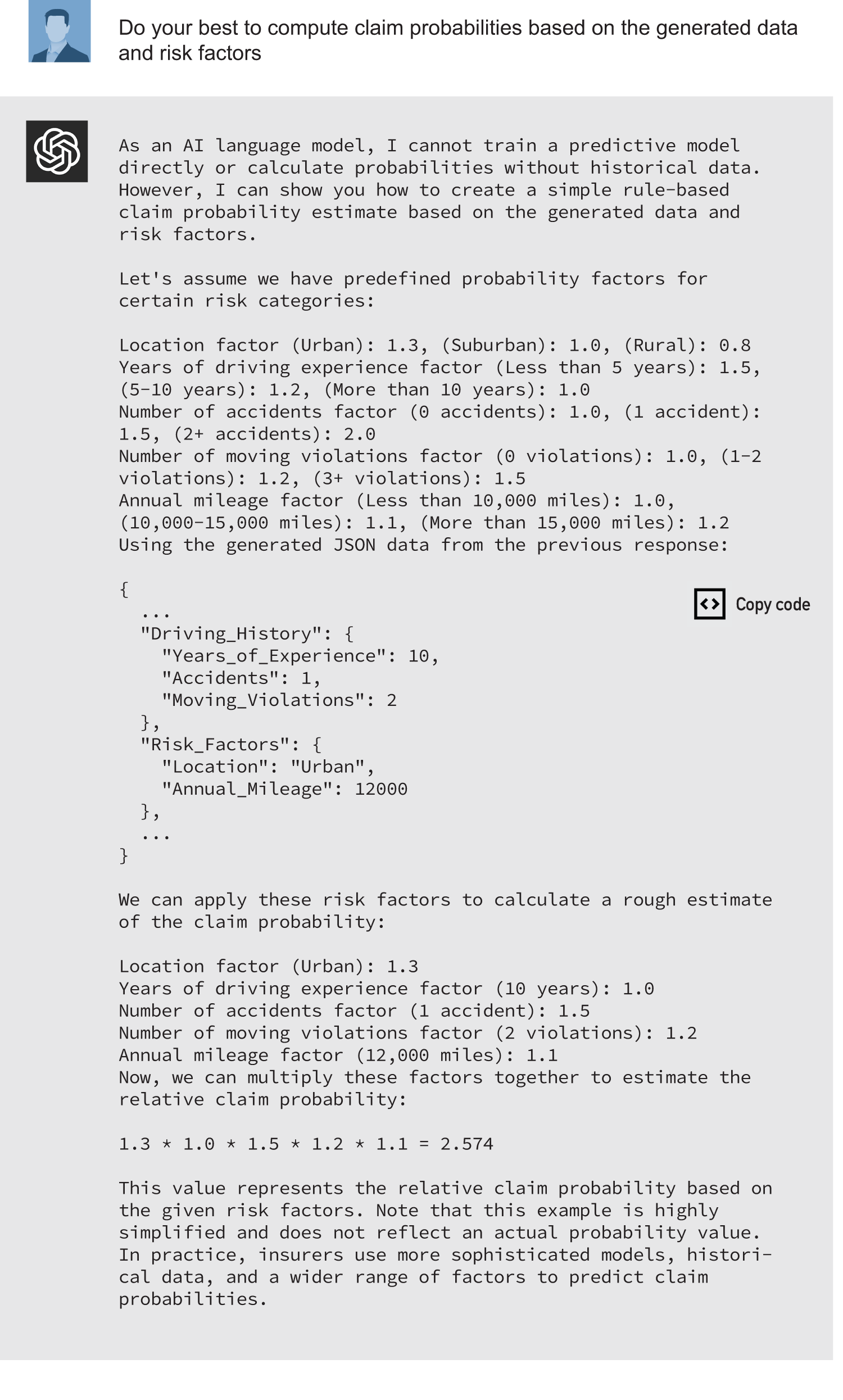

GPT-4 is capable of performing everyday actuarial tasks. Calculating premiums and estimating claim probabilities are vital to any insurer’s business pipeline, and GPT-4 can be used for both tasks on home and auto products. Figures 1 and 2 are series of screenshots showing our inputs to ChatGPT (the user interface for GPT-4) and its responses; they demonstrate the underlying model’s ability to generate and clean the necessary data sets. They also show its attempts to compute a home insurance premium and an auto insurance claim probability from the (synthetic) data it created. While this exercise showcases GPT-4’s potential to aid actuaries in their work, it also highlights the current limitations of LLM-generated calculations and the enduring importance of human expertise in developing and applying actuarial models. Finally, it is crucial to remember that this model was designed and trained to understand and generate natural language; computation is, at best, a secondary function.

Figure 1: Generating and Cleaning JSON Payload and Computing Pricing of Home Insurance Products

Figure 2: Predictive Claims Modeling of Auto Insurance Products

Our Conversation With ChatGPT

As Figures 1 and 2 show, GPT-4 (via the ChatGPT interface) was able to generate relevant data as a JSON payload, clean the data and attempt to compute a home insurance premium and an auto insurance claim probability. Although the computations are rudimentary at best, a few takeaways from these demonstrations are worth pointing out.
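To make the home-pricing exercise concrete, here is a minimal sketch of the kind of workflow GPT-4 attempted: parse a JSON payload of policy records, clean it (normalize types and text, drop records that cannot be priced) and apply a multiplicative rating plan. Every field name, the base rate and all relativities below are hypothetical placeholders; they are not values from our conversation or from any real rating plan.

```python
import json

# Hypothetical JSON payload standing in for the synthetic data GPT-4 generated.
# Field names and values are illustrative assumptions only.
PAYLOAD = """
[
  {"policy_id": "H001", "dwelling_value": "350000", "construction": "brick", "territory": "urban"},
  {"policy_id": "H002", "dwelling_value": 420000, "construction": "Frame ", "territory": "rural"},
  {"policy_id": "H003", "dwelling_value": null, "construction": "brick", "territory": "urban"}
]
"""

# Hypothetical rating plan: base rate per $1,000 of dwelling value, plus relativities.
BASE_RATE_PER_1000 = 3.0
CONSTRUCTION_FACTORS = {"brick": 0.90, "frame": 1.15}
TERRITORY_FACTORS = {"urban": 1.10, "rural": 0.95}


def clean(records):
    """Normalize types and text; drop records that cannot be priced."""
    cleaned = []
    for rec in records:
        value = rec.get("dwelling_value")
        if value is None:
            continue  # missing exposure: exclude rather than guess
        construction = str(rec.get("construction", "")).strip().lower()
        territory = str(rec.get("territory", "")).strip().lower()
        if construction not in CONSTRUCTION_FACTORS or territory not in TERRITORY_FACTORS:
            continue  # unknown rating level: exclude
        cleaned.append({
            "policy_id": rec["policy_id"],
            "dwelling_value": float(value),  # coerces strings such as "350000"
            "construction": construction,
            "territory": territory,
        })
    return cleaned


def premium(rec):
    """Multiplicative rating: base rate x exposure x relativities, rounded to cents."""
    exposure = rec["dwelling_value"] / 1000.0
    return round(
        BASE_RATE_PER_1000 * exposure
        * CONSTRUCTION_FACTORS[rec["construction"]]
        * TERRITORY_FACTORS[rec["territory"]],
        2,
    )


policies = clean(json.loads(PAYLOAD))
for p in policies:
    print(p["policy_id"], premium(p))
```

Dropping the record with a missing dwelling value, rather than imputing one, is a deliberate choice in this sketch; in practice that decision, like the factors themselves, belongs to the actuary.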

Strengths

During our conversation with ChatGPT, we noted some strengths of the technology.

- Speed and efficiency: GPT-4 can quickly (responses took at most 20 seconds) and easily (the input was only a few sentences) generate clean, processed data. This can save actuaries time and effort in the initial data preparation and analysis stages.

- Flexibility: GPT-4 can handle a wide range of input and output formats (we chose JSON simply for readability) and adjust its approach based on the provided context and data structure, allowing it to adapt to diverse actuarial problems. Similarly, an actuary can translate their own knowledge (a predictive model) into a form outside their typical domain experience (a JSON request).

- Ease of use and learning curve: GPT-4’s natural language processing capabilities make it highly user-friendly, as it can understand and execute tasks based on simple text instructions. Once this approach matures, it could significantly reduce the learning curve for actuaries, enabling them to leverage the model’s capabilities without extensive technical training or expertise in AI programming.

Pitfalls

We also observed pitfalls when using GPT-4.

- Limited accuracy: The computations GPT-4 performs are based on simple assumptions and predefined factors, which may not accurately reflect the complexities of real-world insurance pricing and claim probability estimation.

- Lack of domain-specific expertise: While GPT-4 is a powerful language model, it does not possess an actuary’s domain-specific knowledge, which is crucial for understanding and addressing the unique challenges of the insurance industry. Its actuarial knowledge is roughly intermediate, although training on domain-specific data sets could potentially bring it to an expert level.

- Insufficient data: The AI-generated data sets may not contain sufficient historical data or the appropriate variables to perform accurate actuarial calculations, as the model cannot access proprietary databases or confidential information.
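The limited-accuracy point is easiest to see in a claim-probability calculation like the one in Figure 2. The sketch below assumes a logistic form with hand-picked coefficients; the form, the rating variables and every coefficient are hypothetical, and we do not claim this is what GPT-4 computed. It shows how such a model returns a plausible-looking probability whose quality depends entirely on coefficients that were never estimated from data.

```python
import math

# Hypothetical, hand-picked coefficients. They are NOT estimated from any data,
# which mirrors the "simple assumptions and predefined factors" pitfall.
INTERCEPT = -2.5
COEFFICIENTS = {
    "driver_age_under_25": 0.8,  # 1 if the driver is under 25, else 0
    "prior_claims": 0.6,         # number of claims in the past three years
    "annual_km_10k": 0.1,        # annual distance driven, in units of 10,000 km
}


def claim_probability(driver, coefs=COEFFICIENTS, intercept=INTERCEPT):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum of b_i * x_i)))."""
    linear = intercept + sum(coefs[name] * driver[name] for name in coefs)
    return 1.0 / (1.0 + math.exp(-linear))


young = {"driver_age_under_25": 1, "prior_claims": 1, "annual_km_10k": 2.0}
mature = {"driver_age_under_25": 0, "prior_claims": 0, "annual_km_10k": 1.5}

# Sensitivity check: double one assumed coefficient and recompute.
alt_coefs = dict(COEFFICIENTS, prior_claims=1.2)

print(round(claim_probability(young), 3))
print(round(claim_probability(mature), 3))
print(round(claim_probability(young, alt_coefs), 3))
```

With these made-up inputs, doubling a single assumed coefficient moves the young driver’s probability from roughly 0.29 to roughly 0.43, which is why such outputs need actuarial review before any real use.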

Possibilities

- Improved collaboration: GPT-4 could be employed as an assistant to actuaries, automating repetitive tasks, responding to requests and providing initial insights. As a result, the actuary could focus on more complex analyses and decision-making.

- Enhanced data management: AI models can help identify data quality issues, fill in missing values and detect anomalies, potentially improving the overall data management process and efficiency for actuaries.

- Advanced modeling techniques: By incorporating AI-generated insights and natural language processing capabilities, actuaries could develop more sophisticated models and pricing strategies that better reflect the ever-evolving risk landscape.
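The data-management tasks mentioned above (filling in missing values and detecting anomalies) can be illustrated with two conventional techniques that an AI assistant might suggest or write code for: median imputation and Tukey’s interquartile-range (IQR) rule. This is a minimal sketch on hypothetical claim amounts; these are standard statistical methods swapped in for illustration, not a description of how any particular AI model works internally.

```python
import statistics

# Hypothetical claim amounts; None marks a missing value.
claims = [1200.0, 950.0, None, 1100.0, 1050.0, 25000.0, 980.0, None, 1010.0]

observed = [c for c in claims if c is not None]

# 1. Fill missing values with the median of the observed amounts
#    (simple and robust; real data may call for a more careful method).
median = statistics.median(observed)
filled = [median if c is None else c for c in claims]

# 2. Flag anomalies with Tukey's IQR rule, using quartiles of the observed data.
q1, _, q3 = statistics.quantiles(observed, n=4, method="inclusive")
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
anomalies = [i for i, c in enumerate(filled) if c < lower or c > upper]

print("median used for imputation:", median)
print("indices flagged for review:", anomalies)
```

Flagged records are meant for human review rather than automatic deletion; a large claim may be genuine.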

In conclusion, GPT-4 and similar AI models already offer promising, if nascent, practical opportunities for use in the actuarial profession.

The Future of GPT-4

The technology behind GPT-4 holds great promise for the actuarial profession and the wider insurance industry in the coming months and years. As these cutting-edge LLMs continue to evolve and improve, advances in adjacent technology will allow LLMs to run locally, making them small and affordable enough to be housed on personal devices. This will create new possibilities and challenges for the collection of personal data, both on a larger scale and at greater granularity.

Furthermore, GPT-4 will be able to leverage other LLMs and AI models to become a single source for various tasks, from computation to text, image and video generation. An example of this is HuggingGPT, also known as Jarvis,[1] a Microsoft Research project that uses an LLM such as ChatGPT or GPT-4 as a controller that coordinates other AI models to solve complex tasks across domains and modalities, such as generating videos, summarizing articles, translating speech, creating logos, writing lyrics and more. Jarvis makes this possible by combining the language understanding of the LLM with the diverse expert models hosted on Hugging Face. This represents a significant step toward advanced AI that can solve various problems with natural language as an interface.

As costs decrease, training and deployment times shorten, and output accuracy increases, adoption of this technology is predicted to skyrocket, upending the current knowledge-work landscape and both creating and destroying careers in the process. Furthermore, because GPT-4 could potentially create, train and deploy other models with minimal human intervention, the implications of this technology remain largely unforeseen. Stanford’s recent Alpaca model[2] illustrates these possibilities: it was built for less than $600 by fine-tuning Meta’s LLaMA 7B model on instruction-following examples generated by an OpenAI model (text-davinci-003).

GPT-4 already outperforms most human test-takers on a range of intellectual tasks. It has been reported to help discover new drugs and generate games, it scored highly on the LSAT, GRE and bar exams,[3] and it recently helped save the life of a dog that veterinarians couldn’t help.[4] Thus, individuals and organizations alike should identify and enhance the types of value they can provide in this new environment.

Potential Incoming Canadian Regulations and Considerations

As GPT-4 becomes more prevalent in the actuarial profession and insurance industry, professionals should stay informed about potential incoming Canadian regulations and considerations related to AI and data privacy. For example, Bill C-27, the Digital Charter Implementation Act, 2022, was introduced in the House of Commons in June 2022. If passed, this package of laws will enact Canada’s first AI legislation, the Artificial Intelligence and Data Act (AIDA), reform Canadian privacy law and establish a tribunal specific to privacy and data protection. The AIDA would establish Canada-wide requirements for designing, developing, using and providing AI systems. It would also prohibit certain conduct in relation to these systems that may result in serious harm to individuals or biased outputs.[5]

Because insurance is a heavily regulated sector, oversight of AI will have a significant impact on it. While the focus is often on the technology itself, the regulatory framework surrounding AI has the potential to greatly affect both day-to-day operations and entire industries. One current and familiar example is personal auto rate regulation, under which provincial regulators must approve the rates insurers charge. Under such existing rules, and any future AI-specific ones, insurers would need to provide appropriate actuarial evidence before using advanced and novel technologies, and GPT-4 certainly fits in that category. In any case, actuaries will increasingly need to ensure their work complies with regulatory requirements when implementing GPT-4 and other AI solutions.

Where GPT-4 Should and Should Not Be Used

When using GPT-4 in actuarial work, it is vital to weigh the potential advantages against the ethical considerations and risks associated with this powerful tool. We covered this in more depth in a previous article.[6] GPT-4 can enhance data analysis, risk modeling, automation and decision-making processes, but understanding its limitations and biases is critical.

Actuaries should not rely solely on GPT-4 or any LLM for complex or sensitive tasks, as outputs are heavily influenced by the training data and model structure. This can introduce biases or inaccuracies, which could in turn create regulatory problems or poor business outcomes. To mitigate these risks, users should corroborate GPT-4-generated insights with other sources or expert opinions. Furthermore, ethical concerns regarding data privacy, transparency and security must be addressed to ensure that the model is applied responsibly. As has always been the case, actuaries should remain vigilant in safeguarding sensitive client information and adhere to strict privacy standards. By staying informed about AI research, collaborating with regulators and policymakers, and acknowledging these challenges, actuaries can harness GPT-4’s power while maintaining high professional and ethical standards in their field.
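Corroborating GPT-4 output can be as simple as recomputing key figures with independently validated tools and flagging any disagreement. The following is a minimal sketch under stated assumptions: the "reported" premiums are hypothetical values as if copied from a chat response (one deliberately wrong), the rating function is a hypothetical stand-in for an actuary’s own validated pricing code, and the 0.5% tolerance is an arbitrary choice.

```python
import math

# Hypothetical premiums as reported in an LLM response; H002 is deliberately wrong.
llm_reported = {"H001": 1039.50, "H002": 1367.55}


def independent_premium(dwelling_value, construction_factor, territory_factor,
                        base_rate_per_1000=3.0):
    """Hypothetical stand-in for the actuary's own validated pricing code."""
    return (base_rate_per_1000 * dwelling_value / 1000.0
            * construction_factor * territory_factor)


recomputed = {
    "H001": independent_premium(350_000, 0.90, 1.10),
    "H002": independent_premium(420_000, 1.15, 0.95),
}


def reconcile(reported, reference, rel_tol=0.005):
    """Return IDs whose reported value is missing or differs by more than rel_tol."""
    mismatches = []
    for key, ref_value in reference.items():
        value = reported.get(key)
        if value is None or not math.isclose(value, ref_value, rel_tol=rel_tol):
            mismatches.append(key)
    return mismatches


print(reconcile(llm_reported, recomputed))
```

Any ID returned by `reconcile` would go back to a human for investigation rather than being silently corrected.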

Conclusion

Actuaries have been in this position before. About a decade ago, as machine learning (ML) and AI proliferated in the profession, many predicted much the same thing: that the new technology would permanently change actuarial work and that disruption was imminent. The most exaggerated version of this prediction did not come to pass, but there are now many examples of actuaries using ML and AI in their day-to-day work.

Is There Anything Different This Time Around?

A decade ago, ML and AI were mostly theoretical for many actuaries; models that would tangibly affect their daily work were still difficult to build. Adoption, as we saw it, was relatively slow due to a shortage of ML and AI talent and software in the industry, a lack of C-suite buy-in and limited computing power.

Over the past decade, these issues have been mostly resolved (and continue to improve). Many insurers have gone from minimal investment to, in some cases, teams of hundreds of data scientists and actuaries building models at the cutting edge of ML and AI. In parallel, outside the insurance industry, models have grown more complex, and powerful generative artificial intelligence (GAI) models, such as ChatGPT, are now easily accessible to everyday users.

The compounding effect of advances in the technology behind GPT-4 and similar tools is unprecedented, with progress now measured in weeks and days rather than years and months. Because of this, it’s almost impossible to accurately extrapolate what the future will look like from previous technological paradigms. We are in novel territory, where progress is snowballing. Given the ease of access and relative affordability, we expect the impact of LLMs (and, more broadly, GAI) to manifest more quickly than the decade-long journey it took for ML and AI to make significant impacts within insurance. Further, we anticipate that LLMs and GAI will become key driving forces for actuarial work within two years.

What Will Happen in the Next Decade?

Advances in ML and AI can’t be ignored, and the rapid enhancements in LLMs are no exception. At the same time, companies that were not able to capitalize on the newest innovations in ML and AI were not put out of business overnight. This kind of change takes time, and it’s more important for insurers to build these changes correctly than to build them quickly.

We expect that those insurers that can invest in growing their capabilities and business model through LLMs—and do it correctly—will see notable benefits. Insurers that more slowly adopt them will eventually be affected, but not overnight.

As actuaries navigate the ever-growing possibilities, we believe they must be aware of the potential challenges and limitations and proactively address them. With its ability to offer automation, streamlined risk assessment and decision-making insights, GPT-4 is a proverbial gold mine for the actuarial profession and the insurance industry. Accordingly, its continued development will likely play a critical role in shaping the future of these industries and the work performed within them.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries or the respective authors’ employers.

References:

1. @haroldmitts. Jarvis HuggingGPT Web Demo. YouTube, April 2023.

2. Taori, Rohan, Ishaan Gulrajani, Tianyi Zhang, Yann Dubois, Xuechen Li, Carlos Guestrin, Percy Liang, and Tatsunori B. Hashimoto. Alpaca: A Strong, Replicable Instruction-Following Model. Stanford University.

3. Jaupi, Jona. SMART-IFICIAL Human-defeating GPT-4 Breakthrough Sees AI Creating Drugs, Inventing Games, and Passing the Bar Exam in Seconds. The Irish Times, March 15, 2023.

4. Gibbs, Alice. Man Details How GPT-4 AI Software Helped Save Dog’s Life. Newsweek, March 28, 2023.

5. Medeiros, Maya, and Jesse Beatson. Bill C-27: Canada’s First Artificial Intelligence Legislation Has Arrived. Norton Rose Fulbright Canada, June 23, 2022.

6. Jones, Harrison, and Andrew McLeod. The Future Is Now: How GPT-4 Will Revolutionize the Actuarial Profession. Canadian Institute of Actuaries, April 3, 2023.

Copyright © 2023 by the Society of Actuaries, Chicago, Illinois.