Calculated Risk

Using structured scenario analysis for effective operational risk management and stable determination of capital requirements

December 2017/January 2018

For financial institutions, operational risk (OpRisk) might not be the most material risk from a capital perspective. OpRisk is the risk of a change in value caused by actual losses differing from expected losses, where the losses arise from inadequate or failed internal processes, people and systems, or from external events (including legal risk). However, history, including recent events, shows that a single OpRisk event may bankrupt a financial institution or significantly deplete its capital base and reputation. Two recent cases show the potential damage OpRisk events can do to capital and reputation:

- Société Générale[1]: rogue trader Jerome Kerviel lost $7.2 billion through unauthorized trading positions.
- BNP Paribas[2]: the bank paid a $9 billion penalty for breaking U.S. money laundering laws by helping clients evade sanctions on Iran, Sudan and other countries.

Moreover, many of the drivers of the last decade’s subprime crisis can be traced to operational risk events. These include mortgage fraud, model errors, negligent underwriting standards and failed due diligence, combined with inadequate governance of new financial instruments. The crisis drove government debt to record levels and led to the rescue of several international financial sector participants. Among them was the $182 billion bailout of AIG,[3] the world’s largest life insurer at that time.

Despite the potentially extreme impact of OpRisk events, current practices suffer from significant weaknesses. These weaknesses hinder robust risk measurement and a fully effective risk management framework.

Issues With Operational Risk Measurement and Management

OpRisk management is often considered insufficiently driven by empirical loss evidence. It is dominated by qualitative tools such as risk and control self-assessment (RCSA), scenario analysis and key risk indicators (KRIs), which are often based mainly on expert judgment (see Figure 1). When relying on such qualitative tools, many financial institutions do not establish a statistical link between losses and capital requirements.

Figure 1: Standard Operational Risk Management Tools

| Tool | Description |
|---|---|
| RCSA | Risk and control self-assessment (RCSA) is the exhaustive evaluation of an organization’s risks and controls on a categorical (traffic-light) scale, which results in an overall risk map of the organization. |
| KRIs | Key risk indicators (KRIs) are measures of risk meant to act as an early warning of changes in the risk profile of an institution, department, process, activity and so on. Examples include turnover of key personnel or number of corrections. |
| Scenario analysis | The detailed assessment of specific extreme scenarios, including how they develop until the OpRisk loss materializes, their potential effects and possible mitigation measures. |
| Loss data | The database storing all materialized risks that have caused, or nearly caused, economic losses. Internal losses materialized within the institution; external losses materialized in other institutions and were acquired or exchanged. |

In addition, OpRisk modeling often produces unstable capital estimates because internal loss data are scarce and the tail of the loss distribution is generally “fat.” The results then depend heavily on the modeler’s choice of distribution and on “non-validated” expert judgment.
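The effect of scarce data on tail estimates can be illustrated with a small simulation. The Python sketch below is an illustration under assumed parameters, not the authors’ model. It repeatedly draws a small sample of losses from a hypothetical heavy-tailed lognormal severity and refits a lognormal by maximum likelihood each time. It then records the fitted 99.9% quantile, which stands in for the tail that drives capital. `TRUE_MU`, `TRUE_SIGMA` and the helper names are arbitrary assumptions.

```python
import numpy as np

# Hypothetical "true" severity distribution: lognormal with a heavy tail
TRUE_MU, TRUE_SIGMA = 10.0, 2.0
Z_999 = 3.090232306167813  # standard normal quantile at 0.999

def fitted_quantile_999(losses):
    """Fit a lognormal by maximum likelihood; return its 99.9% quantile."""
    logs = np.log(losses)
    mu_hat, sigma_hat = logs.mean(), logs.std()  # ddof=0 gives the MLE
    return float(np.exp(mu_hat + Z_999 * sigma_hat))

def quantile_spread(n_losses, n_trials=200, seed=0):
    """Refit on many independent samples; return (min, median, max) of the estimates."""
    rng = np.random.default_rng(seed)
    estimates = np.array([
        fitted_quantile_999(rng.lognormal(TRUE_MU, TRUE_SIGMA, n_losses))
        for _ in range(n_trials)
    ])
    return float(estimates.min()), float(np.median(estimates)), float(estimates.max())

true_q = float(np.exp(TRUE_MU + Z_999 * TRUE_SIGMA))
for n in (30, 3000):
    lo, med, hi = quantile_spread(n)
    print(f"{n:>5} losses: min {lo:,.0f}  median {med:,.0f}  max {hi:,.0f}  (true {true_q:,.0f})")
```

With only 30 losses, the fitted tail quantile varies widely from sample to sample. With thousands of losses it clusters much more tightly around the true value, which is why data scarcity translates directly into capital volatility.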

Finally, these issues make it difficult to link OpRisk modeling and capital calculations with potential mitigation actions. In market, credit or insurance risk, exposures are linked to specific assets or liabilities. OpRisk exposures, by contrast, are generally linked to higher-level units of measurement and processes, such as business lines, and may even span several business lines. This makes it harder to link specific action plans to risk measurement.

In the following sections, we present a method and a framework to address these issues. The method is structured scenario analysis (SSA), used in conjunction with a framework/tool that seamlessly integrates internal and external data. Together they support effective OpRisk management and the calculation of reliable (stable) capital requirements.

STRUCTURED SCENARIO ANALYSIS FOR EFFECTIVE OpRISK MANAGEMENT

Scenario analysis evaluates very specific and potentially severe OpRisk events and links them to the calculation of capital when limited internal loss data is available. However, scenario analysis is subject to significant cognitive biases that must be mitigated to ensure reliable results. Such biases include:

- herding: the tendency to believe things because many other people believe them;
- anchoring: the tendency to rely too heavily on the first piece of information available when making decisions;
- denial bias: experts’ discomfort in answering questions about their own potential losses or errors;
- confirmation bias: the tendency to interpret, favor and recall information in a way that confirms one’s preexisting beliefs or hypotheses;
- fear of looking unwise in front of peers or superiors;
- the influence of formal or natural leaders on the group;
- dissimilar risk skills across subject-matter experts (SMEs);
- and others.

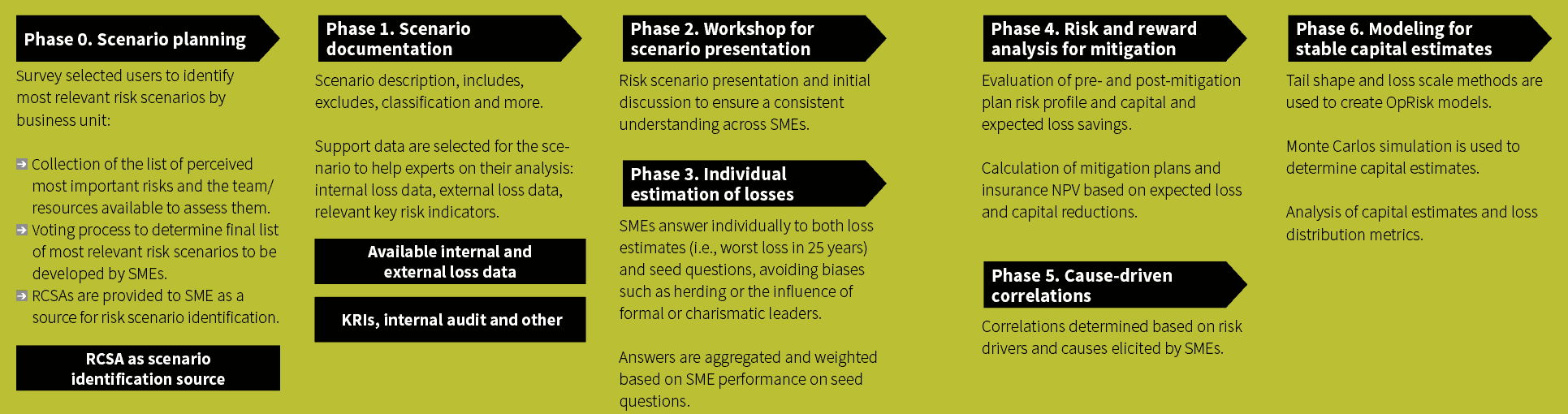

SSA is a statistically driven method, derived from the technology and engineering sectors, that can significantly improve the quality of expert-judgment-based risk assessments. It permits robust validation of risk assessments when little data is available, and it leverages the collective knowledge of SMEs to enhance the final evaluation. It is structured in phases designed to manage these biases effectively (see Figure 2).

Figure 2: Structured Scenario Analysis Framework


Source: Automated by OpCapital Analytics from The Analytics Boutique

Phase 0: In the initial phase, specifically designed open and closed questions are used to identify and prioritize the main risk concerns among SMEs. The highest-priority concerns are selected for detailed analysis and final modeling. RCSAs can serve as an initial source for identifying critical scenarios.

Phase 1: The risk scenario is documented with all relevant supporting data. This includes internal data (where available), external data, related RCSAs, KRIs, internal audit reports, case studies and others. This documentation gives SMEs sound reference points, which mitigates anchoring bias. It also encourages analytical deduction rather than automatic, intuitive answers.

Phase 2: This phase consists of a workshop in which the scenario and all supporting data are explained to the SMEs. The workshop ensures a consistent understanding of the risk scenario to be evaluated, which helps to mitigate confirmation bias.

Phase 3: SMEs provide their loss estimates individually (in the format of, for example, worst loss in five years, worst loss in 15 years, in 50 years and so on), rather than in a group workshop. Responding individually mitigates multiple biases including herding, fear of not looking knowledgeable, and the influence of natural or formal leaders.
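Answers of the form “worst loss in N years” can be translated into a severity distribution. The Python sketch below is a minimal illustration under two assumptions: a Poisson event frequency (the hypothetical `annual_frequency` parameter) and a lognormal severity. Under one common convention, a 1-in-N-year loss is exceeded on average once in N years. It therefore corresponds to the severity quantile 1 - 1/(λN), and ln(loss) = μ + σ·z is fitted by least squares. The function name and example figures are illustrative, not part of the SSA framework.

```python
import math
from statistics import NormalDist

def fit_lognormal_to_scenarios(estimates, annual_frequency):
    """
    estimates: {return period in years: SME loss estimate}.
    Assumes Poisson event frequency, so a 1-in-N-year loss is exceeded with
    probability 1 / (annual_frequency * N) per event.
    Returns (mu, sigma) of a lognormal fitted by least squares on log losses.
    """
    if len(estimates) < 2:
        raise ValueError("need at least two return periods to fit two parameters")
    z, y = [], []
    for years, loss in estimates.items():
        exceed_prob = 1.0 / (annual_frequency * years)
        if not 0.0 < exceed_prob < 1.0:
            raise ValueError(f"1-in-{years} estimate is inconsistent with the frequency")
        z.append(NormalDist().inv_cdf(1.0 - exceed_prob))  # severity quantile as z-score
        y.append(math.log(loss))
    # Ordinary least squares for log(loss) = mu + sigma * z
    n = len(z)
    z_bar, y_bar = sum(z) / n, sum(y) / n
    sxx = sum((zi - z_bar) ** 2 for zi in z)
    sxy = sum((zi - z_bar) * (yi - y_bar) for zi, yi in zip(z, y))
    sigma = sxy / sxx
    mu = y_bar - sigma * z_bar
    return mu, sigma

# Hypothetical SME answers: worst loss in 5, 15 and 50 years; 2 events per year
mu, sigma = fit_lognormal_to_scenarios({5: 2e6, 15: 6e6, 50: 2e7}, annual_frequency=2.0)
print(f"mu = {mu:.3f}, sigma = {sigma:.3f}")
```

Fitting on the log scale treats each return period equally. An institution might instead weight the longer return periods more heavily, since they matter most for capital.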

The scenario questionnaire uses performance-based expert judgment methodologies, meaning that it includes seed questions (questions whose answers are known) to measure the skills and knowledge of responding SMEs. Seed questions are used to identify the SMEs who make better risk predictions.

The individual SME answers are then aggregated into a single answer. Each SME is weighted by performance on the seed questions, so those who demonstrated better predictions carry more weight in the aggregate.
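Performance-based weighting of this kind is commonly associated with Cooke’s classical model. The Python sketch below is a simplified version whose details are assumptions, not the article’s specification:

- Each SME gives 5th, 50th and 95th percentiles for every seed question.
- An SME’s calibration score is the chi-square p-value of how the known answers fall into the four inter-percentile bins.
- Weights are proportional to calibration alone. Cooke’s model also multiplies by an information score, which is omitted here.
- The SMEs’ scenario percentiles are averaged directly rather than pooling full distributions.

```python
import math

# Share of known answers expected below the 5th percentile, between the 5th
# and 50th, between the 50th and 95th, and above the 95th percentile
EXPECTED = (0.05, 0.45, 0.45, 0.05)

def chi2_sf_3dof(x):
    """Closed-form survival function of a chi-square variable with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)

def calibration_score(seed_quantiles, realizations):
    """seed_quantiles: one (q05, q50, q95) tuple per seed question; realizations: true answers."""
    counts = [0, 0, 0, 0]
    for (q05, q50, q95), truth in zip(seed_quantiles, realizations):
        if truth < q05:
            counts[0] += 1
        elif truth < q50:
            counts[1] += 1
        elif truth < q95:
            counts[2] += 1
        else:
            counts[3] += 1
    n = len(realizations)
    # Relative information (Kullback-Leibler) of observed bin shares vs EXPECTED
    info = sum((c / n) * math.log((c / n) / p) for c, p in zip(counts, EXPECTED) if c)
    return chi2_sf_3dof(max(0.0, 2 * n * info))

def performance_weighted_answer(scenario_answers, seed_answers, realizations):
    """Weight each SME by calibration score and average their scenario percentiles."""
    scores = [calibration_score(s, realizations) for s in seed_answers]
    total = sum(scores)
    if total == 0:
        raise ValueError("no SME received a positive calibration score")
    weights = [s / total for s in scores]
    pooled = tuple(sum(w * answer[k] for w, answer in zip(weights, scenario_answers))
                   for k in range(3))
    return weights, pooled
```

An SME whose intervals rarely contain the known answers receives a calibration score near zero. Their scenario estimate therefore contributes almost nothing to the pooled answer.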

In summary, SSA leverages collective knowledge by identifying the most qualified individual assessments and enriching these with other expert views, following a more scientific approach. It also provides traceable and transparent means for a robust justification and quality assurance of the evaluations of risk scenarios.

Risk and Reward Analysis: Risk Mitigation Investment Evaluation

Risk management is too frequently evaluated from the single-sided view of potential losses. However, a view of both risk and reward and the resulting trade-offs would increase the value add of the risk evaluation process. The risk and reward analysis is implemented (Phase 4) by analyzing both the current risk levels and their potential reduction given the introduction of new mitigation actions.

The consideration of mitigating actions in risk assessment also increases the value add for the financial institution as well as for the first line of defense, as it represents an opportunity to improve the processes they manage. It also mitigates denial bias, permitting a better disclosure of risk exposures.

The risk and reward approach can be implemented by analyzing the risk profile before and after the implementation of mitigation actions, which yields different capital and expected loss estimates. The savings in capital and expected loss, together with the investment required to implement the mitigation action (e.g., controls, automation), can be used to estimate the net present value (NPV) of the action.
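The before-and-after comparison can be sketched with a small Monte Carlo simulation in Python. The sketch assumes a loss distribution approach with Poisson frequency and lognormal severity. It defines capital as the 99.9th percentile of annual losses minus expected loss, which is one common convention; the article does not specify its capital measure. The mitigation action is modeled, hypothetically, as halving event frequency, and all parameter values are illustrative.

```python
import numpy as np

def simulate_annual_losses(freq, mu, sigma, n_years=200_000, seed=42):
    """Simulate total annual losses: Poisson event counts, lognormal severities."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(freq, n_years)
    severities = rng.lognormal(mu, sigma, counts.sum())
    # Assign each simulated loss to its year, then sum the losses per year
    year_of_loss = np.repeat(np.arange(n_years), counts)
    return np.bincount(year_of_loss, weights=severities, minlength=n_years)

def risk_profile(freq, mu, sigma):
    """Return (expected loss, capital), with capital = 99.9% quantile minus EL."""
    annual = simulate_annual_losses(freq, mu, sigma)
    expected_loss = float(annual.mean())
    capital = float(np.quantile(annual, 0.999)) - expected_loss
    return expected_loss, capital

# Hypothetical scenario: mitigation halves event frequency, severity unchanged
el_pre, cap_pre = risk_profile(freq=2.0, mu=12.0, sigma=2.0)
el_post, cap_post = risk_profile(freq=1.0, mu=12.0, sigma=2.0)
print(f"EL saving: {el_pre - el_post:,.0f}  capital saving: {cap_pre - cap_post:,.0f}")
```

The two savings printed here are the inputs a business case would discount against the cost of the mitigation action.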

The NPV of the investment required to support mitigating actions can then be used to build a business case that justifies the investment and supports risk management decisions.
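The arithmetic of such a business case can be sketched as follows. This Python function is a hypothetical illustration, not the article’s method. It assumes the annual benefit of the action is the expected loss saving, plus the cost of capital applied to the capital released, less any running cost of the control. That benefit is discounted over a fixed horizon, and the upfront investment is subtracted. The parameter names and figures are assumptions.

```python
def mitigation_npv(investment, annual_running_cost, el_saving, capital_saving,
                   cost_of_capital, discount_rate, years):
    """Hypothetical NPV of a mitigation action over a fixed horizon."""
    annual_benefit = (el_saving                           # lower expected losses
                      + cost_of_capital * capital_saving  # cheaper capital held
                      - annual_running_cost)              # ongoing cost of the control
    present_value = sum(annual_benefit / (1 + discount_rate) ** t
                        for t in range(1, years + 1))
    return present_value - investment

npv = mitigation_npv(investment=5_000_000, annual_running_cost=200_000,
                     el_saving=1_000_000, capital_saving=30_000_000,
                     cost_of_capital=0.10, discount_rate=0.08, years=5)
print(f"NPV: {npv:,.0f}")
```

A positive NPV, as with these illustrative inputs, supports the investment. Treating released capital as a recurring saving assumes it stays released for the whole horizon.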

Cause-Driven Correlations for Determining Risk Dependencies

Correlations between scenarios are difficult to calculate from actual loss observations. Risk scenarios most frequently refer to very specific, low-frequency extreme events with few or no internal loss observations. This makes it impossible to determine correlations from internal loss data.

The use of external loss data can deliver a correlation matrix, thanks to potentially larger data sets. However, external data is a conglomerate of losses from diverse institutions, each with its own idiosyncrasies, making the calibration for a given financial institution challenging.

We propose the cause-driven correlation (CDC) method to improve the calculation of correlations. In this case, correlations are determined based on scenario sensitivity to major common risk factors (Phase 5), such as those in Figure 3.

Figure 3: Pre- and Post-Mitigation Risk Profile

| Risk Factor | Description of the Dependency |
|---|---|
| Increasing business complexity | The increasing complexity and riskiness of the business, products and processes used to deliver them |
| Employees and work environment | Quality, ability, motivation and employees’ willingness to stay at the institution (retention) |
| External fraud environment and trends | The increasing intensity and sophistication of fraud environment and trends |
| Internal control environment and trends | The quality, intensity and effectiveness of the internal control and operational risk management environment |
| Regulatory trends | The increasing number of regulations, laws and corresponding penalties/fines |
| Technology environment, evolution and trends | The increasing sophistication and dependence on technology and the quality of the processes and infrastructure that supports it |
| Vendors and other external resources | The evolution in the quality and ability of the institution’s vendors and other resources |
| Volumes and market | The changing business volumes, macroeconomy and market evolution that put stress on the processes that support the business |
| Financial results of the institution | Sensitivity to the impact of financial results of the institution through the satisfaction of employees, availability of resources (investment and HR) and changes in the organization |

Source: Created using OpCapital Analytics software from The Analytics Boutique

The sensitivity of each scenario to each risk factor is determined by expert elicitation, in which SMEs assign each risk factor a percentage of influence per scenario. A factor correlation model, like those used in credit and market risk, is then applied. It derives the cross-correlations between scenarios from their sensitivities to these common risk factors.
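One way to turn the elicited percentages into correlations is sketched below in Python. The mapping is our assumption, not the article’s specification. Each percentage is read as the share of a scenario’s variability explained by an independent, standardized risk factor. The factor loading is then its square root, and the remaining share is idiosyncratic. The correlation between two scenarios is the sum, over factors, of the products of their loadings. The scenarios, factor subset and percentages in the example are hypothetical.

```python
import numpy as np

# Rows: hypothetical scenarios (cyberattack, payment system outage, rogue trading)
# Columns: factors from Figure 3 (technology, internal control, external fraud)
# INFLUENCE[i, k]: share of scenario i's variability attributed to factor k
INFLUENCE = np.array([
    [0.4, 0.2, 0.2],
    [0.5, 0.2, 0.0],
    [0.0, 0.5, 0.0],
])

def cause_driven_correlation(influence):
    """Scenario correlation matrix implied by independent common factors."""
    w = np.asarray(influence, dtype=float)
    if np.any(w < 0) or np.any(w.sum(axis=1) > 1 + 1e-12):
        raise ValueError("each row must contain non-negative shares summing to at most 1")
    loadings = np.sqrt(w)          # beta_ik = sqrt(w_ik)
    corr = loadings @ loadings.T   # covariance explained by the common factors
    np.fill_diagonal(corr, 1.0)    # idiosyncratic part tops each variance up to 1
    return corr

print(np.round(cause_driven_correlation(INFLUENCE), 3))
```

Because the matrix is a sum of a factor covariance and a non-negative idiosyncratic diagonal, it is always a valid (positive semidefinite) correlation matrix. Each correlation can be traced back to the specific factors two scenarios share.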

CDC offers several advantages:

- Correlations are intuitive, being fully explained by the scenarios’ shared factor sensitivities even when limited data is available.
- The correlation analysis is linked to the institution’s potential weaknesses, providing additional insight into effective mitigation.
- SMEs become more aware of the risks, their causes and the dependencies across them.

Modeling for Stable Capital Estimates

Stability of capital estimates is a critical issue in OpRisk capital modeling. Stable estimates support steady resource allocation and lend credibility to the capital modeling process.

However, OpRisk capital estimates are often volatile and unstable. The tail of the loss distribution, usually assumed to be fat, must be modeled from a limited amount of data. It is therefore crucial to incorporate all available information efficiently.

In this context, we have developed a framework/tool that seamlessly integrates multiple sources of information (internal loss data, external loss data and scenario analysis). The aim is a more robust and stable estimate of the loss distribution.

We propose the scale and tail shape (STS) method,[4] which directly addresses the volatility of tail-shape estimates caused by lack of data (Phase 6). Using STS, the volatility of capital estimates can be significantly reduced, in many cases by up to 80 percent.

Under STS, external loss data, in conjunction with SSA, is used to determine the tail shape. External loss data is more abundant for extreme tail losses and can therefore give a more accurate picture of their behavior. The tail shape can be determined by analyzing the kurtosis and skewness of external loss data. Alternatively, external loss data can be used to estimate the tail parameter of a fat-tailed distribution directly. Loss estimates provided by SMEs, in turn, determine the scale of the institution’s losses.

Both quantities serve as fitting targets. The loss estimates from experts represent the scale of the institution’s losses, and the kurtosis and skewness from external loss data represent the tail shape. The fitted distribution is the one that matches both this scale and this tail shape.
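The fitting step can be illustrated with a deliberately simple Python sketch, which should not be read as the published STS method. It uses a lognormal, whose skewness depends on its shape parameter σ alone. σ is solved from a target skewness, assumed to have been measured on external loss data. The scale μ is then set so that a chosen severity quantile equals an SME loss estimate. Matching kurtosis as well would require a distribution with more shape parameters. The function names, quantile level and figures are hypothetical.

```python
import math
from statistics import NormalDist

def lognormal_skewness(sigma):
    """Skewness of a lognormal; depends only on the shape parameter sigma."""
    e = math.exp(sigma ** 2)
    return (e + 2.0) * math.sqrt(e - 1.0)

def sigma_from_skewness(target, lo=1e-6, hi=5.0, tol=1e-12):
    """Invert the (monotonically increasing) lognormal skewness by bisection."""
    if not lognormal_skewness(lo) < target < lognormal_skewness(hi):
        raise ValueError("target skewness outside the supported range")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lognormal_skewness(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def sts_lognormal(target_skewness, sme_loss, sme_quantile):
    """
    Tail shape from external data (skewness), scale from an SME estimate:
    returns (mu, sigma) such that the lognormal has the target skewness and
    its `sme_quantile` severity quantile equals `sme_loss`.
    """
    sigma = sigma_from_skewness(target_skewness)
    mu = math.log(sme_loss) - sigma * NormalDist().inv_cdf(sme_quantile)
    return mu, sigma

# Hypothetical inputs: external-data skewness 40, SME 99th-percentile loss of 25 million
mu, sigma = sts_lognormal(target_skewness=40.0, sme_loss=25e6, sme_quantile=0.99)
print(f"mu = {mu:.3f}, sigma = {sigma:.3f}")
```

The separation is the point of the sketch. The shape is pinned down by the larger external data set, so the scarce internal information only has to determine the scale, which it can estimate far more stably.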

The use of STS to model OpRisk scenarios results in a stable capital estimate facilitating the use of capital models in the institution’s operational and business planning, providing credibility to the modeling process.

Conclusion

It is possible to obtain stable and robust OpRisk capital estimates and to use them to identify specific, effective risk management actions. Performance-based expert judgment techniques make SME loss estimates more robust and trusted, particularly when integrated with other sources of information (e.g., internal and external loss data). The result is an evaluation of very specific risk events that can be linked to specific mitigation actions. SSA delivers these benefits through a scientific and practical approach.

References:

1. Iskyan, Kim. “Here’s the Story of How a Guy Making $66,000 a Year Lost $7.2 Billion for One of Europe’s Biggest Banks.” Business Insider. May 8, 2016.

2. Thompson, Mark, and Evan Perez. “BNP Paribas to Pay Almost $9 Billion Penalty.” CNNMoney. July 1, 2014.

3. Kaiser, Emily. “After AIG Rescue, Fed May Find More at Its Door.” Reuters. September 16, 2008.

4. Cavestany, Rafael, Brenda Boultwood, and Laureano Escudero. Operational Risk Capital Models. London: Risk Books, 2015.